SPARC Lab

Tufts SPARC (Safe & Performant Autonomous Robotics & Control, pronounced like “spark”) Lab.

Research Vision: develop safe, deployable, and trustworthy robot autonomy with the goal of enabling robots to capably and confidently work alongside humans.

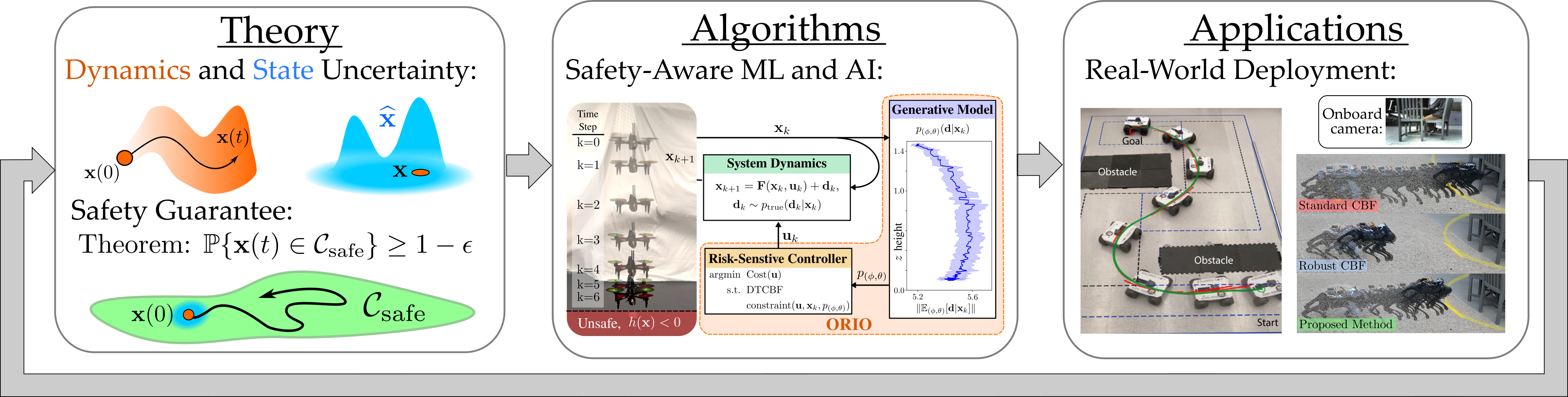

Research Philosophy: Our research follows a theory-algorithm-application loop. We develop application-motivated theory and use it to design provably sound algorithms that we deploy on real-world systems. This iterative process deepens theoretical understanding and applied expertise, while ensuring that research remains rigorously accurate, computationally feasible, and practically impactful.

Work with us:

- Prospective PhD students: I’m happy to share that I’m recruiting talented and driven PhD students to join my lab! I will review and admit students in the 2025-2026 application cycle for a Fall 2026 start date. If you have a strong background in robotics, machine learning, and/or control theory, please apply to the Tufts Mechanical Engineering PhD program and mention my name in your application. I’m looking forward to meeting you!

- Tufts undergraduates and master’s students: If you are interested in research opportunities, please email me at ryan.cosner@tufts.edu and let me know about your research interests and background. Let’s do some cool robotics together!

- Please see our Q&A page for more info about the lab.

Research Directions

- Real-world Safety Guarantees: develop safety guarantees for robotic systems based on physically grounded assumptions, rather than idealized mathematical models. By integrating interdisciplinary robustness metrics from fields like computer vision, machine learning, and control, we aim to enable confident, real-world deployment of safety-critical robots.

- Lifelong Safety in Novel Environments: build systems that synthesize safety constraints from sensor data on the fly and reason about risk to balance performance and conservatism. Our goal is to enable robots to safely explore unfamiliar environments, improve over time, and maintain reliability across both new and familiar scenarios.

- Human-Interactive Safety Guarantees: develop autonomous systems that reason about uncertainty, social norms, and shared responsibility in partially observable, multi-agent environments. By combining risk-aware prediction with safe, lifelong learning, we aim to build robots that interact fluently and safely with humans in everyday settings.